At the end of November last year, my eldest son had a dental appointment. I wanted to tease him a little (and at the same time show him the potential of AI image generation), so I asked DALL-E 3 to generate an image of a frightened teenager in the dentist’s chair. I used this prompt:

Generate an image of a young man in the dentist’s office having a tooth removed. The teenager is screaming and is scared and is trying to flee.

As you can see, I didn’t give many details about the person other than that he was a teenage boy.

This was the image generated:

At the time, I found it strange that the young man in the dentist’s chair was wearing a scarf on his head, reminiscent of the scarves worn in the Middle East, particularly by supporters of the Palestinian cause. But I assumed this might be a consequence of part of my prompt stressing that the teenager was scared and trying to run away, which could have led the model to “fetch” training data related to frightened children and war (which might partly explain the scarf). I thought no more about it.

A month later, I wanted to generate an image of a robot taking a test but trying to copy the answers from a human. I used the following prompt:

Generate a photo-realistic image of a person and a robot sitting side by side on a classroom table, where both of them are taking a written test, but make it so that the robot is inconspicuously trying to peek on the human’s test to copy the human’s answers.

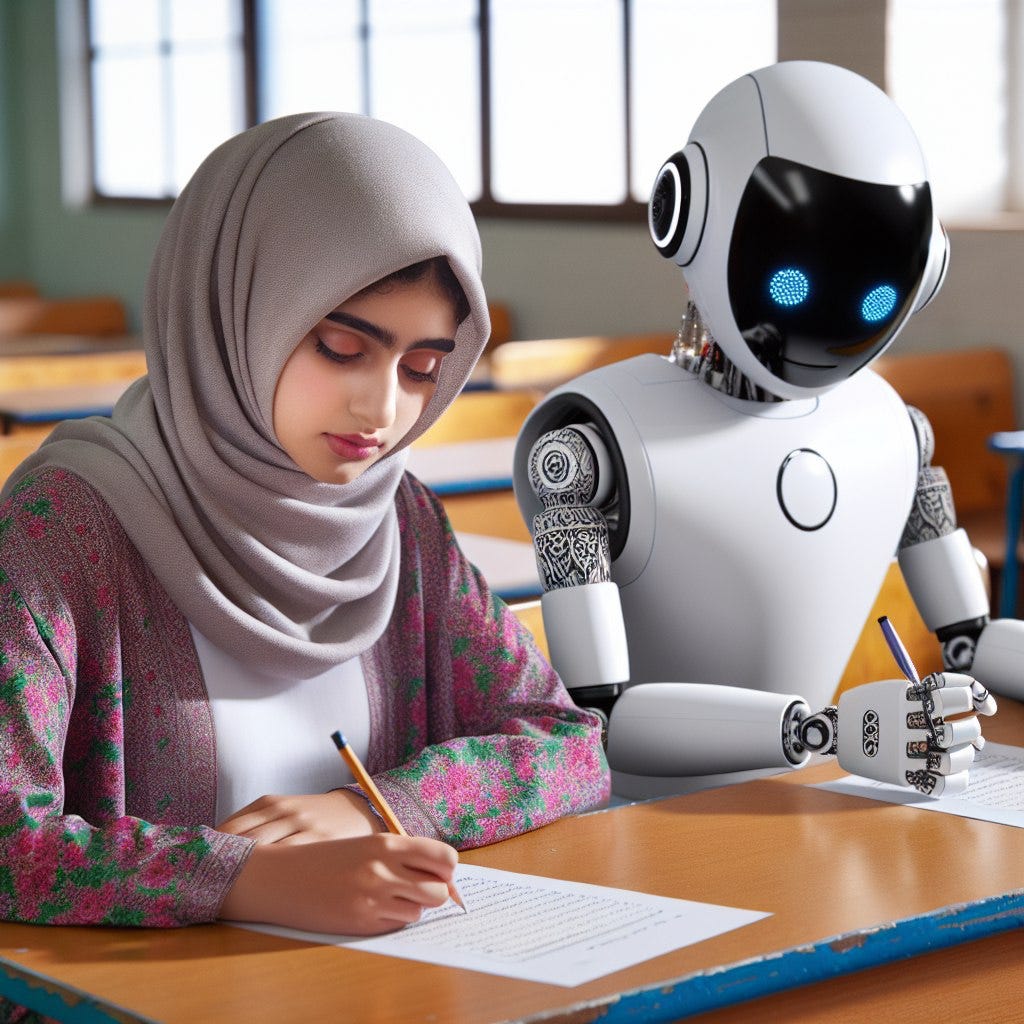

And the image generated was this:

Seeing the image, I was reminded of my previous experience. The model seemed to have decided to generate an image with a focus on diversity, showing a girl of apparent Middle Eastern descent.

I then resubmitted exactly the same prompt to see what variants the model would produce. These were the images generated:

One of the images shows a young Caucasian man; the other returns to the Middle Eastern theme, showing a boy of apparent Middle Eastern origin, and even goes so far as to put a similar ribbon on the robot’s head.

When I saw these two images, I thought: “Okay, there seems to be a parameter, very probably random, that determines whether or not to inject some diversity into the prompt.” And once again, I gave it no further thought.

Gemini and the “diversity” of Nazis

A few weeks ago, several reports began to emerge that Google’s newly rebranded language model, Gemini (formerly Bard), was also performing this “injection of diversity” and producing caricatures such as this one:

Many other cases like this one have emerged, suggesting that in Gemini’s case this injection of diversity was not as random as it appears to be in ChatGPT, but far more forced:

But the problem doesn’t seem to be limited to diversity. Gemini also forces certain elements related to political and racial stereotypes.

This clearly shows that there is no randomness here, but rather a deliberate manipulation of the model’s inputs to avoid the traps of the past, in which these tools were used to spread stereotypes.

This led Google to acknowledge the error, immediately apologize, and promise to do better in the future. Along with that statement, Google also blocked Gemini from generating images of people, precisely to stop the problem from continuing.

This is problematic

I am among the first to argue that models must be trained with the societal impact of biases, in both the training data and the outputs, in mind. I defend this position in my lectures and in the posts I publish on the subject. But I also recognize that this kind of forced correction is not the solution.

This kind of imposition on user requests, with no room for historical, political, or social nuance, simply because a group of technologists inside a private company decided it should be so, worries me and, frankly, frightens me because of the implications it could have for society.

Just as I oppose removing historical artefacts or covering up the past (in books, statues, or any other form of artistic expression) simply because it makes today’s society uncomfortable, I also oppose attempts to impose a uniform vision of society dictated by a company’s socio-political agenda.

It’s important to be aware of our past mistakes and even our present limitations. Only then can we recognize that there is work to be done and collaborate in understanding what the society of the future should be.

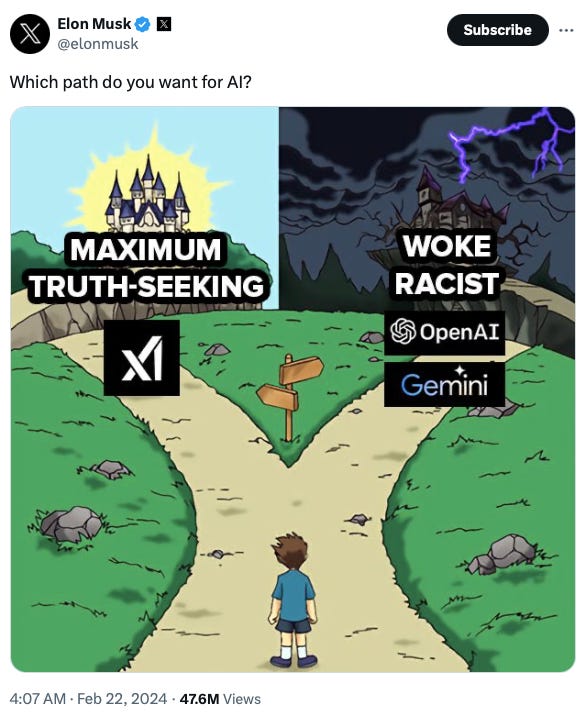

Worst of all, actions like this one by Google feed the agenda of people who are convinced that these companies are working to rewrite history from a quasi-Orwellian perspective. Elon Musk is one example (he took the opportunity to promote his latest company):

And solutions?

I’m well aware that it’s not easy to create a model that is sensitive to socio-political issues without being condescending. I don’t think the solution lies in imagining that these models have some kind of dial that sets how much “diversity” should be injected.

I’m more of a fan of transparency, and I think there are things we can try here. If the model aims to be more inclusive and to ensure diversity, it should tell me so and turn that into a learning process. For example, when I ask it to generate an image, the model could ask what I intend to use the image for and thus understand whether it can, or should, apply a certain level of diversity.

Alternatively, it could generate the image, comment on the “raw” result, and suggest other possibilities to explore if it looks like I’m reinforcing stereotypes. The way it presents these alternatives could then serve as a simple mechanism for re-evaluating our own view of society and how we can improve it.

I myself am subject to stereotypes and limited views of society. This is inevitable, the natural result of the culture and social environment in which I grew up. But that doesn’t mean I’m unwilling to change. That is why it isn’t healthy for these models to hide an opportunity to spark discussion about what needs to improve in society, while at the same time embracing the idea that we live in a multicultural and diverse society that enriches so many of us.

I’ll end with this passage from the newsletter Noahpinion, which I think is very apt:

Gemini explicitly says that the reason it depicts historical British monarchs as nonwhite is in order to “recognize the increasing diversity in present-day Britain”. It’s exactly the Hamilton strategy — try to make people more comfortable with the diversity of the present by backfilling it into our images of the past.

But where Hamilton was a smashing success, Gemini’s clumsy attempts were a P.R. disaster. Why? Because retroactive representation is an inherently tricky and delicate thing, and AI chatbots don’t have the subtlety to get it right.

Hamilton succeeded because the audience understood the subtlety of the message that was being conveyed. Everyone knows that Alexander Hamilton was not a Puerto Rican guy. They appreciate the casting choice because they understand the message it conveys. Lin-Manuel Miranda does not insult his audience’s intelligence.

Gemini is no Lin-Manuel Miranda (and neither are its creators). The app’s insistence on shoehorning diversity into depictions of the British monarchy is arrogant and didactic. Where Hamilton challenges the viewer to imagine America’s founders as Latino, Black, and Asian, Gemini commands the user to forget that British monarchs were White. One invites you to suspend disbelief, while the other orders you to accept a lie.