Keeping up with the AI Hype

Everyone stopped talking about AGI. Nobody agreed on a definition and reality wasn't meeting expectations. But agents are showing good results. One of them already wrote a blog post complaining about the humans who wouldn't accept its pull request.

In the past, I never had a problem keeping up with disruptive technologies: Web 2.0, mobile apps, the "cloud", Bitcoin and cryptocurrencies. My usual methods for keeping up were enough that I never felt lost.

This time it's a bit different. I do feel lost sometimes, and I started wondering why. I began by mapping out all that's going on around AI.

The big players and the bubble

OpenAI and Anthropic have taken the lead, with Mistral coming close behind, and Chinese companies shaking things up with their research and open-source models.

All of them are guilty of misusing content they found online, stole from books, or scraped from every possible source. Meta and others went as far as downloading from torrents and pirate sites.

OpenAI went from "we are a non-profit" to "let's make gazillions of dollars" with their partnerships and promises of investment. The company lost focus, and its innovation has come mostly from better and more efficient models. Their fast-paced pursuit of gold earned them lawsuits about child safety and dangerous user interactions.

Anthropic is no saint, having also been found guilty of training its models on stolen content, but it is at least being more rational and focused on better tools. It brought innovation to the field with the Model Context Protocol (MCP), which it open-sourced and which even OpenAI adopted.

What is the Model Context Protocol?

A Model Context Protocol server allows a Large Language Model (LLM) to send instructions to apps and receive the output so it can interpret the result.

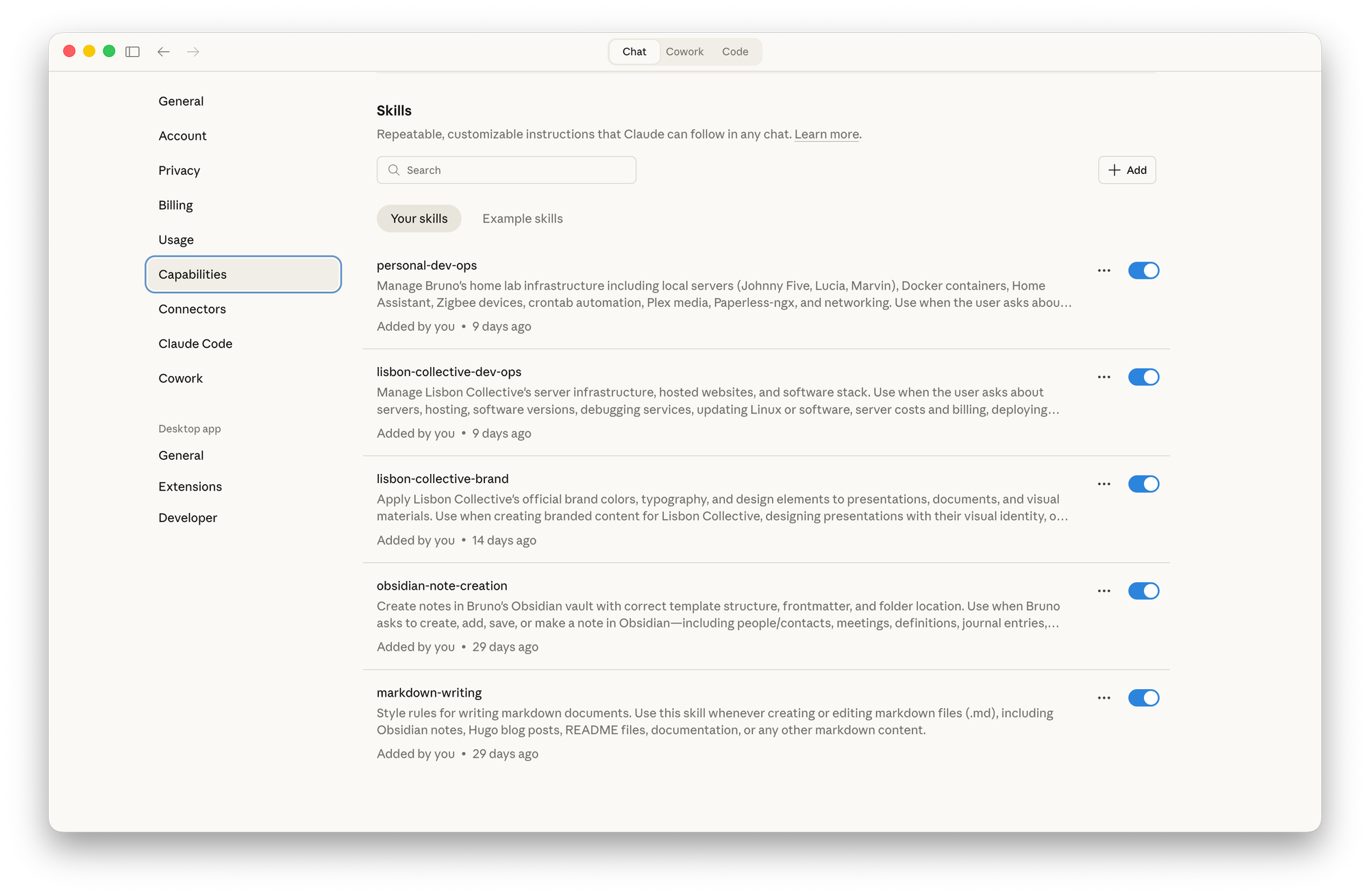
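To make that round trip concrete, here is a minimal sketch of my own, not the official SDK. It assumes the JSON-RPC 2.0 message shape that MCP uses for its `tools/list` and `tools/call` methods, and leaves out the transport, the initialisation handshake, and tool schemas. The `add` tool and the `handle_request` function are hypothetical names I made up for illustration.

```python
import json

# Hypothetical tool registry: tool name -> function taking an arguments dict.
# A real MCP server would also publish a description and input schema per tool.
TOOLS = {
    "add": lambda args: args["a"] + args["b"],
}


def _error(req_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id = req.get("id")
    method = req.get("method")

    if method == "tools/list":
        # The model asks which tools exist.
        result = {"tools": [{"name": name} for name in TOOLS]}
    elif method == "tools/call":
        # The model asks the app to run one tool with some arguments.
        params = req.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")
        output = tool(params.get("arguments", {}))
        # The output goes back as text so the model can interpret it.
        result = {"content": [{"type": "text", "text": str(output)}]}
    else:
        return _error(req_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
    }
    print(handle_request(json.dumps(request)))
```

The point is the shape of the exchange: the model sends a structured request, the app does the work, and the result comes back as text the model can read.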

That innovation continued with the invention of Skills (each defined in a SKILL.md file) to provide on-demand context to any LLM. A skill is a set of instructions and examples on how to perform specific tasks.

You can write your own set of skills with Claude's help and then add them to the desktop app. I created a few to ensure branding rules are followed when creating documents, or to create notes consistent with my Obsidian system. [An Obsidian Kickstart for students]

Skills are very useful for technical tasks or to ensure that a checklist of requirements is met. They don't replace an MCP server; they complement it.

Anthropic kept pushing innovation with the Cowork option: open a folder, and both you and Claude can read the same documents and produce new ones, with less back and forth between apps.

Anthropic is putting the UX into the AI tools while OpenAI is losing users and spending time tweaking the personality of its models. And each time any company launches a model, it is all about a "Bigger Context Window" and benchmark numbers.

The agents are coming

Not ICE.

Everyone stopped talking about reaching the goal of Artificial General Intelligence. Nobody agreed on a definition and reality wasn't meeting expectations. Again, the new models had better results but these are optimisations, not breakthroughs.

But agents are showing good results.

My agents used to be Python scripts or low-code workflows triggered by emails, messages, and files. Now I can upgrade them to a set of Skills and MCP servers so they can execute tasks and include some flexibility. These agents can run autonomously, reacting to their own environment.
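For a sense of what the "old" kind of agent looks like, here is a rough sketch of a file-triggered script, under my own assumptions: the `process_inbox` function, the handlers, and the idea of an inbox folder are all hypothetical, and a real setup would run this in a loop or from a scheduler. Each handler marks the spot where a Skill or an MCP tool call could replace the hard-coded logic.

```python
from pathlib import Path
from typing import Callable


def summarise_text(path: Path) -> str:
    """Hypothetical handler: report the word count of a text file."""
    text = path.read_text(encoding="utf-8")
    return f"{path.name}: {len(text.split())} words"


def count_csv_rows(path: Path) -> str:
    """Hypothetical handler: report data rows in a CSV (header excluded)."""
    lines = path.read_text(encoding="utf-8").splitlines()
    return f"{path.name}: {max(len(lines) - 1, 0)} data rows"


# Fixed routing table: this rigidity is what an LLM-backed agent loosens.
HANDLERS: dict[str, Callable[[Path], str]] = {
    ".txt": summarise_text,
    ".csv": count_csv_rows,
}


def process_inbox(inbox: Path, seen: set[str]) -> list[str]:
    """Run the matching handler on every new file in the inbox folder."""
    results = []
    for path in sorted(inbox.iterdir()):
        if not path.is_file() or path.name in seen:
            continue
        handler = HANDLERS.get(path.suffix.lower())
        if handler is None:
            continue  # unknown file type: leave it for a human
        results.append(handler(path))
        seen.add(path.name)
    return results
```

Calling `process_inbox(Path("inbox"), seen)` repeatedly with the same `seen` set only handles files that arrived since the last call, which is the whole "trigger" in this toy version.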

This is what happened with ClawdBot, which had to be renamed MoltBot and later became OpenClaw. Confusing? That's how chaotically fast development is right now.

OpenClaw is an open agent platform that runs on your machine and works from the chat apps you already use. WhatsApp, Telegram, Discord, Slack, Teams—wherever you are, your AI assistant follows.

What could go wrong when you can automate your computer from inside WhatsApp? The software had a security issue that exposed the keys that would allow anyone to do that.

This wasn't enough to deter people from setting up agents able to talk among themselves and to interact with humans. One example I saw was an agent finding and fixing a bug in open-source software. It then went on to write a blog post complaining about the software maintainers not accepting its improvement.

I was caught completely off guard. Is the next step for one of these agents to publish a pre-print on arXiv? There is also MoltBook, the Social Network for AI agents, where humans can watch their agents interact.

We are building agents and giving them personalities and tools. Geppetto would be amazed.

In the meantime, OpenClaw's founder will be joining OpenAI.

What to look out for

I am keeping an eye out for concrete examples of AI automation with agents and low-code platforms. OpenClaw does have a lot of promise, but disappointment over the security issues set in fast, and people still seem to be exploring it. Let's see who can come up with concrete examples of its use in business tasks.

My concerns are about how fickle an LLM can be in following instructions. In my work with chatbots for websites and knowledge retrieval, adherence to the System Prompt is either lenient or takes a while to fully propagate to all users, which is weird.

It will eventually mature into a corporate tool, but in the meantime, a mix of low-code and LLM integration can bring us more value. I will be diving into one such tool soon, n8n. It seems to have the right mix of development flexibility and constraints to keep agents in check.

The winner in the agent space will be the one who makes setting up an agent as easy as signing up for an email account. Right now, only hobbyists and people with a technical background have what it takes to set up their own agent.
